How Edge AI is Changing Smart Devices

What is Edge AI?

Edge AI brings intelligence closer to where data is created: inside devices, instead of on faraway cloud servers. Think of a smart camera detecting motion in milliseconds without sending footage to the internet, or a wearable analyzing your heart rate in real time during a workout. That local processing is the magic of Edge AI.

Unlike traditional AI, which uploads data to a cloud for decisions, Edge AI performs inference on-device or near-device. That means fewer delays, stronger privacy, and greater reliability. In a world where people expect instant responses and data-sensitive industries avoid cloud bottlenecks, Edge AI isn’t just a trend; it’s becoming a default architecture powering modern smart ecosystems.

Definition & Concept

Edge AI refers to running AI models locally on smart devices or edge nodes instead of centralized cloud servers. It prioritizes on-device inference, meaning raw data stays closer to its source while insights and actions happen instantly.

Key Terminologies Explained (Edge Device, Edge Node, Edge Network)

Edge devices are endpoints like cameras, sensors, phones, or wearables. Edge nodes are small local servers or gateways that help process heavier tasks. Edge networks link these endpoints with minimal latency before data ever needs cloud support.

Edge AI vs Cloud AI: Key Differences

The biggest difference between Edge AI and Cloud AI is location and speed. Cloud AI relies on internet connectivity and centralized compute, which adds latency and raises privacy concerns. Edge AI flips that model by embedding optimized AI directly into hardware or local gateways.

Cloud AI excels at training large models, but inference, the moment a device makes a decision, can slow down if every action depends on cloud communication. Edge AI enables real-time execution, preserves bandwidth, and keeps user data private. As smart devices grow widespread in homes, hospitals, factories, retail stores, and cities, the “cloud-only AI” approach starts feeling outdated for day-to-day device decisions.

Latency & Real-Time Comparison

Cloud AI takes extra milliseconds or seconds to respond due to network travel time. Edge AI processes data locally, delivering results fast enough for real-time automation, video analytics, voice recognition, and instant device behavior.
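A rough way to see why the network round trip matters is to compare total response time with the per-frame budget of a real-time task. The sketch below is illustrative only: the function name and every timing figure are assumptions chosen for the example, not measurements.

```python
def fits_frame_budget(inference_ms: float, network_rtt_ms: float, fps: float) -> bool:
    """Return True if inference plus any network round trip fits in one frame interval."""
    frame_budget_ms = 1000.0 / fps  # e.g. about 33 ms per frame at 30 fps
    return inference_ms + network_rtt_ms <= frame_budget_ms


# Assumed figures: a slower on-device model with no network hop,
# versus a faster cloud model behind an 80 ms round trip.
edge_ok = fits_frame_budget(inference_ms=15.0, network_rtt_ms=0.0, fps=30)
cloud_ok = fits_frame_budget(inference_ms=5.0, network_rtt_ms=80.0, fps=30)
print("edge fits budget:", edge_ok)
print("cloud fits budget:", cloud_ok)
```

Under these assumed numbers, the cloud path misses the frame budget even though its model is faster, because the round trip dominates.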

Privacy, Security & Data Processing Differences

Cloud AI transmits raw data over the internet, increasing exposure risk. Edge AI secures data locally inside devices, enabling sensitive industries like medical tech or industrial monitoring to stay compliant without compromising intelligence.

Why Edge AI Matters for Smart Devices

Smart devices are evolving from simple automated tools into intelligent decision-making systems. Modern consumers expect devices to react instantly, not after a delay. Developers want lower compute costs and fewer API-dependent failures. Businesses want deeper analytics without risking data leaks.

Edge AI solves all three by making AI native to the device layer. This architecture allows motion-vision processing, contextual automation, predictive insights, voice responses, and personalization to run without heavy cloud reliance. As billions of IoT endpoints scale globally, Edge AI matters because it makes smart devices fast, secure, efficient, and future-proof, and these factors strongly influence product adoption in both consumer tech and enterprise device markets.

How Edge AI Works: End-to-End Technical Workflow

Edge AI models are first trained in powerful environments, often using large cloud GPUs. Once trained, they go through compression and optimization, like pruning, quantization, and conversion into lightweight formats. The optimized model is then deployed onto edge hardware such as mobile chips, AI accelerators, or embedded modules.

When data is generated (camera frames, audio signals, or sensor input), it is immediately passed through the model for local inference. If a task overwhelms local resources, hybrid fallbacks can ping the cloud, but only for secondary support. The workflow’s goal is simple: keep intelligence local for speed, and keep the cloud optional for scale.

Model Training → Edge Deployment Steps

The model is trained, optimized for efficiency, packed into a deployable runtime, and embedded into hardware-friendly formats before being installed into edge chips, IoT gateways, mobile firmware, or AI edge modules.

Local Data Processing vs Cloud Fallback Model

Devices prioritize inference locally. Only metadata, alerts, or summaries are sent to the cloud if needed. Cloud fallback is used selectively, ensuring speed isn’t sacrificed, and bandwidth isn’t constantly consumed.
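The local-first pattern with selective fallback can be sketched as a small routing function. This is a minimal illustration under stated assumptions: `decide`, `Decision`, the 0.8 confidence threshold, and both stand-in models are hypothetical names invented for this example, not part of any particular framework.

```python
from dataclasses import dataclass
from typing import Callable, Optional, Sequence, Tuple

# A "model" here is any callable returning (label, confidence).
Model = Callable[[Sequence[float]], Tuple[str, float]]


@dataclass
class Decision:
    label: str
    confidence: float
    source: str  # "edge" or "cloud"


def decide(sample: Sequence[float], local_model: Model,
           cloud_model: Optional[Model] = None,
           min_confidence: float = 0.8) -> Decision:
    """Run local inference first; ask the cloud only when the local result is weak."""
    local_label, local_conf = local_model(sample)
    if local_conf >= min_confidence or cloud_model is None:
        return Decision(local_label, local_conf, "edge")
    try:
        cloud_label, cloud_conf = cloud_model(sample)
        return Decision(cloud_label, cloud_conf, "cloud")
    except ConnectionError:
        # Offline: keep the local answer rather than block the device.
        return Decision(local_label, local_conf, "edge")


def toy_local_model(sample: Sequence[float]) -> Tuple[str, float]:
    """Hypothetical stand-in: confident only when the mean signal is strong."""
    mean = sum(sample) / len(sample)
    return ("motion", 0.95) if mean > 0.5 else ("unknown", 0.40)


def toy_cloud_model(sample: Sequence[float]) -> Tuple[str, float]:
    """Hypothetical stand-in for a larger remote model."""
    return ("no_motion", 0.90)


print(decide([0.9, 0.8], toy_local_model, toy_cloud_model).source)
print(decide([0.1, 0.2], toy_local_model, toy_cloud_model).source)
```

The design choice worth noting is the `except ConnectionError` branch: a failed fallback degrades to the local answer instead of stalling the device.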

Core Edge AI Enabling Technologies

Edge AI relies on smart hardware, optimized model design, local inference engines, TinyML architectures, neural processing units (NPUs), computer-vision chips, embedded Edge AI modules, and fast local data buses. Supporting layers include 5G edge networking, ML libraries, lightweight AI frameworks, and optimized model runtimes such as on-device interpreters and accelerator SDKs. These technologies ensure AI can run efficiently on battery-powered devices, sensors, and low-memory boards without overheating or slowing down operations.

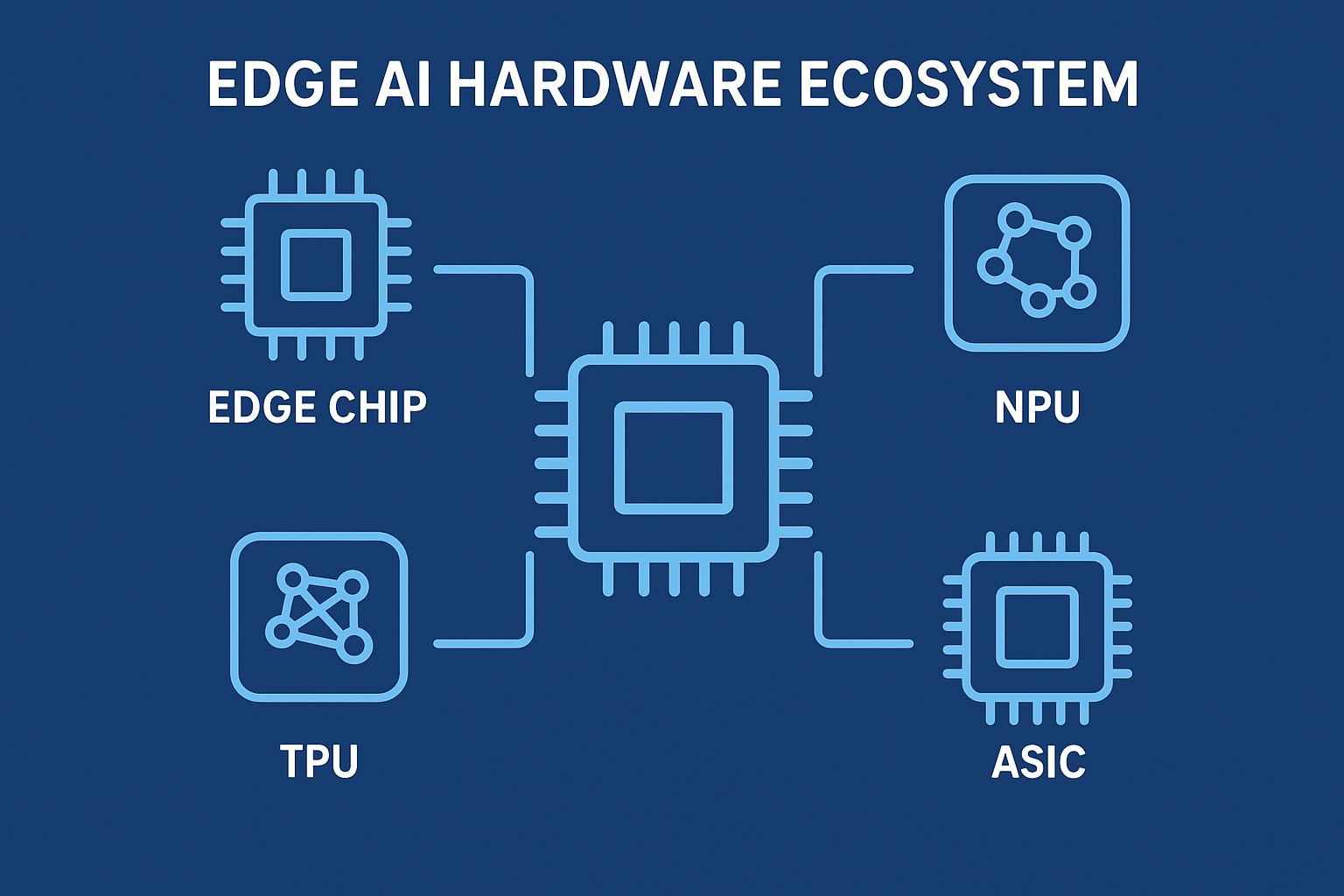

Edge AI Hardware Ecosystem (Edge Chips, NPUs, TPUs, etc.)

Edge AI thrives on specialized silicon. Popular hardware includes embedded AI accelerators, mobile-system-on-chip units, neural processing cores, and USB-based AI inference modules.

Chips are optimized for inference, not heavy training, to reduce heat, energy drain, and latency. Some architectures provide built-in model optimization, while others offload AI via attached edge modules. Developers and businesses leverage these chips to embed AI features directly into device firmware, enabling seamless smart behavior.

Examples of Edge-Optimized AI Chips

Examples include Neural Processing Units found in mobile SoCs, embedded vision inference chips, Tiny ML silicon for micro-sensors, robotics-ready edge compute boards, and 5G-connected hardware inference gateways.

Embedded AI Accelerators in Consumer Devices

These accelerators are small, low-power AI modules that process video, voice, and sensor inputs inside consumer gadgets like smart home devices, phones, drones, IoT hubs, wearable rings, and smart audio speakers.

Real-Time Local Inference & Faster Decision Making

Edge AI decisions happen instantly. A smart thermostat doesn’t need cloud approval to adjust temperature. Your doorbell cam doesn’t buffer video to identify a visitor. A wearable doesn’t upload every heartbeat count. All inference happens inside the device layer using optimized local AI engines. That real-time response creates a better user experience and opens doors for automation requiring immediate triggers.

Ultra-Low Latency for Smart Devices

Latency drops when distance drops. Edge AI eliminates cloud round-trips by enabling AI decisions locally. This is crucial for use cases like video object-tracking, emergency alerts, voice commands, face detection, anomaly recognition, autonomous routing, industrial vision QC, robotic automation, biometric readings, and instant pattern detection across sensor clusters.

Enhanced Data Privacy & Security at the Edge

Edge AI keeps data local. A hospital camera or personal wearable shouldn’t broadcast raw patient data to the internet every second. With Edge AI, only meaningful insights, not raw inputs, are transmitted. This strengthens data compliance, reduces risk exposure, and builds consumer trust at scale.

Reduced Bandwidth & Cloud Dependency

Cloud communication consumes massive bandwidth when millions of devices send raw data for every decision. Edge AI avoids that by transmitting only compressed outputs, summaries, or flagged alerts. Bandwidth drops, connectivity failures drop, and uptime rises, making device infrastructure efficient and sustainable.
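The scale of the saving is easier to judge with a back-of-the-envelope calculation. The figures below (1,000 cameras, a 2 Mbit/s stream, 50 alerts per hour at 200 bytes each) are assumptions picked for illustration, not data from a real deployment.

```python
SECONDS_PER_DAY = 86_400


def daily_bytes_streaming(devices: int, bitrate_bps: int) -> int:
    """Bytes per day if every device streams raw video continuously."""
    return devices * bitrate_bps // 8 * SECONDS_PER_DAY


def daily_bytes_alerts(devices: int, alerts_per_hour: int, alert_bytes: int) -> int:
    """Bytes per day if every device sends only small alert messages."""
    return devices * alerts_per_hour * 24 * alert_bytes


# Assumed fleet: 1,000 cameras.
raw = daily_bytes_streaming(devices=1_000, bitrate_bps=2_000_000)
alerts = daily_bytes_alerts(devices=1_000, alerts_per_hour=50, alert_bytes=200)

print(f"raw streaming: {raw / 1e12:.1f} TB/day")
print(f"alerts only:   {alerts / 1e6:.0f} MB/day")
print(f"reduction:     {raw // alerts}x")
```

With these assumed inputs, the fleet moves from terabytes to megabytes per day; real ratios depend on video bitrate, alert frequency, and payload size.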

Cost & Power Efficiency Gains

Edge AI isn’t just faster; it’s cheaper long-term. Devices reduce cloud compute consumption. Local inference runs on dedicated accelerators that draw minimal battery power, lowering both infrastructure cost and power drain. That efficiency creates better margins for product deployments and enterprise device AI scaling.

Offline AI Capabilities for Smart Devices

Edge AI allows intelligence to run even without the internet. This is critical for remote industrial sites, rural healthcare monitoring, security devices, warehousing automation, and consumer gadgets in low-connectivity homes. Offline AI creates resilience that other architectures can’t guarantee.

Edge AI in Smart Home Devices

Edge AI is turning ordinary smart home products into proactive companions rather than reactive machines. Devices like AI-powered cameras, voice assistants, smart doorbells, and home hubs now analyze surroundings instantly without relying on cloud back-and-forth. Local inference enables features such as real-time visitor identification, suspicious activity detection, adaptive lighting, personalized voice recognition, and predictive thermostat adjustments.

This localized intelligence ensures faster reactions, reduced bandwidth use, and higher reliability in environments where connectivity may dip. As smart homes grow more complex with dozens of connected endpoints, Edge AI becomes the orchestration layer that keeps personal data inside the home while delivering instant automation, making households smarter, safer, and more responsive than ever.

On-Device Automation Examples

Edge AI allows home devices to perform automation instantly: doorbell cams detect packages or people, speakers authenticate voices locally, and smart hubs trigger actions without needing the internet for each micro-decision.

Personalized Home Routines Powered by Local AI

Local AI profiles resident behavior, enabling routines like lights adjusting by presence, thermostats predicting comfort levels, and assistants responding differently to each user’s local voice signature.

Edge AI in Wearables & Personal Gadgets

Wearables are where Edge AI shines the brightest because the value is in instant insights and intimate data protection. Devices such as smart rings, fitness bands, and earbuds now run micro-models locally to interpret biometric patterns, ambient context, motion data, and voice requests in real-time.

On-device inference powers heart rhythm anomaly tagging, workout form correction, AI noise cancellation, adaptive audio modes, sleep cycle predictions, fall detection alerts, and health trend patterning, all without streaming raw data to the cloud. For users, this means lower battery drain, stronger privacy, and faster feedback loops.

For brands and developers, it creates product differentiation by offering intelligence that feels immediate, personal, and dependable, even when offline.

Real-Time Health Monitoring & Alerts

Edge AI processes heart rate, motion, and sleep biometrics instantly, flagging anomalies and sending only summarized alerts rather than raw data, improving response time and data compliance.
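The "flag locally, send only a summary" idea can be sketched with a rolling baseline. This is a deliberately simple statistical illustration, not a clinical algorithm: the class name, window size, warm-up length, and z-score threshold are all assumptions made for the example.

```python
from collections import deque
from statistics import mean, pstdev
from typing import Optional


class HeartRateMonitor:
    """Keeps a rolling window of readings on-device and emits compact alerts."""

    def __init__(self, window: int = 30, z_threshold: float = 3.0, warmup: int = 10):
        self.readings = deque(maxlen=window)  # raw data never leaves this object
        self.z_threshold = z_threshold
        self.warmup = warmup

    def update(self, bpm: float) -> Optional[dict]:
        """Add one reading; return a small alert dict if it deviates strongly."""
        alert = None
        if len(self.readings) >= self.warmup:
            baseline = mean(self.readings)
            spread = pstdev(self.readings) or 1.0  # avoid dividing by zero
            z = (bpm - baseline) / spread
            if abs(z) > self.z_threshold:
                # Only this summary would be transmitted, not the raw stream.
                alert = {"type": "hr_anomaly", "bpm": bpm,
                         "baseline": round(baseline, 1)}
        self.readings.append(bpm)
        return alert


monitor = HeartRateMonitor()
for i in range(20):
    monitor.update(69 if i % 2 == 0 else 71)  # steady resting rate
print(monitor.update(150))  # sudden spike produces an alert
print(monitor.update(70))   # normal reading produces None
```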

Context-Aware Personal Features

Wearables use local AI to understand context (running, commuting, resting) and auto-adjust experiences like audio profiles, mic modes, and fitness tracking granularity without cloud triggers.

Edge AI in Smartphones & Consumer Devices

Phones have quietly become the largest edge AI ecosystem in the world. From real-time translation to computational photography, smarter keyboards, voice isolation, local assistants, and on-screen insights, Edge AI features are embedded into everyday usage moments.

Advanced chips inside mobile hardware handle inference for face recognition, object extraction from video, generative photo improvements, local language processing, fraud call detection, and AI-based network switching, all occurring natively inside the device OS layer. This approach not only improves user experience but also reduces backend compute cost for brands deploying intelligent mobile features. The era of the “AI-dependent cloud phone” is fading; the future belongs to phones that think, adapt, and secure data at the point of interaction.

Edge AI in Healthcare Smart Devices

Healthcare devices are adopting Edge AI because real-time detection can be the difference between early intervention and missed insights. Products such as medical imaging tools, patient monitoring sensors, AI stethoscopes, smart glucose readers, and bedside cameras now run optimized models locally to detect anomalies, tag risky patterns, and push only critical insights to secure dashboards.

Local inference reduces latency, protects patient privacy, and supports offline operation in remote or emergency environments. Hospitals benefit from smoother bandwidth scaling, while wearable medical devices gain battery efficiency and regulatory compliance advantages. Edge AI isn’t replacing clinicians; it’s accelerating visibility, improving signal precision, and creating faster, safer diagnostic loops.

Edge-Powered Medical Sensors & Diagnostics

Edge AI medical sensors run inference locally on readings such as respiration, ECG anomalies, glucose spikes, or cough irregularities, transmitting only flagged insights for clinician review.

Medical Imaging & On-Device AI

Local AI assists imaging devices by pre-processing scans, flagging irregular tissue, and reducing cloud storage or transfer burden while enabling faster diagnostic triage.

Edge AI in Industrial Smart Devices (Manufacturing & Automation)

Edge AI is fueling the next wave of industrial transformation by embedding intelligence inside factories rather than routing decisions through distant servers. Local AI vision systems perform quality inspection, detect production anomalies, monitor worker safety, interpret mechanical behavior for predictive maintenance, and trigger automated shutdowns if risk signatures spike.

Sensors feed edge gateways that analyze vibration, sound, temperature, pressure, and optical signals in real time. This enables operational continuity even in low-connectivity zones, while reducing cloud infrastructure cost for industrial AI workloads. Businesses benefit from faster production cycles, smarter robotics, fewer defects, lower downtime, and cost-efficient scaling.

Computer Vision for Quality Inspection

Local AI vision systems inspect thousands of units per minute, detecting surface defects, color inconsistencies, alignment mismatches, and production anomalies without manual intervention.

Predictive Maintenance at the Edge

Edge AI interprets machine sound, vibration patterns, and thermal signals locally to predict breakdowns, reducing unplanned downtime and repair costs.
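One simple form of this is comparing a machine's current vibration energy against a healthy baseline. The sketch below assumes a known baseline RMS and uses made-up warning and alarm ratios; production systems typically use richer features (spectra, trends) and learned models.

```python
import math
from typing import Sequence


def rms(samples: Sequence[float]) -> float:
    """Root-mean-square amplitude of a vibration window."""
    return math.sqrt(sum(x * x for x in samples) / len(samples))


def maintenance_status(samples: Sequence[float], baseline_rms: float,
                       warn_ratio: float = 1.5, alarm_ratio: float = 2.5) -> str:
    """Classify a window as 'ok', 'warn', or 'alarm' relative to a healthy baseline.

    The ratio thresholds are illustrative assumptions, not industry constants.
    """
    ratio = rms(samples) / baseline_rms
    if ratio >= alarm_ratio:
        return "alarm"
    if ratio >= warn_ratio:
        return "warn"
    return "ok"


healthy = [1.0, -1.0, 1.0, -1.0]
worn = [2.0, -2.0, 2.0, -2.0]
failing = [3.0, -3.0, 3.0, -3.0]
for window in (healthy, worn, failing):
    print(maintenance_status(window, baseline_rms=1.0))
```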

Real-Time Safety & Anomaly Detection

Sensors and vision nodes trigger instant hazard alerts, detecting smoke, gas leaks, unauthorized access, risky worker proximity to machines, or hardware anomalies as they happen.

Edge AI in Retail Smart Devices & Smart Stores

Retail needs instant intelligence, and that’s why Edge AI is growing fast in smart stores. Smart shelves detect inventory gaps, local CCTV tags suspicious activities, POS devices run fraud detection, beacons deliver personalized recommendations, and edge hubs optimize foot-traffic understanding.

Instead of sending hours of video to the cloud, stores process object recognition, people analytics, product movement, and behavioral tagging locally, sending only relevant summaries. This reduces bandwidth cost, strengthens customer privacy, and keeps operations real-time.

For brands in retail tech, edge inference unlocks better sales outcomes, higher retention, and more scalable in-store AI deployments.

Smarter POS, Shelves & Customer Analytics

Edge AI POS devices run local fraud checks, smart shelves analyze stock levels visually, and camera nodes summarize customer movement for real-time restocking or insights.

Edge AI in Smart Cities & Public Infrastructure

Cities are deploying Edge AI into traffic systems, public transport nodes, safety cameras, water sensors, environmental monitors, lighting infrastructure, and edge connectivity hubs. Local AI supports traffic-flow decisions, detects public safety anomalies, interprets waste management signals, analyzes weather shifts, and orchestrates device automation across physical infrastructure.

The intelligence doesn’t constantly hit the cloud, which keeps cities scalable, cost-efficient, and resilient even during network congestion. Smart cities of the future will run on AI that operates at intersections, street corners, sensor nodes, transit edges, and gateways, not just centralized cloud dashboards.

Traffic & Public Safety Edge Examples

Edge AI traffic cameras optimize signal timing locally, pollution sensors run inference near intersections, and public safety nodes flag anomalies before cloud escalation.

Edge AI in Transport, Mobility & Edge Devices

From smart bus chips to road cameras and mobility hubs, Edge AI is enabling real-time transport intelligence. It supports collision avoidance tagging, object tracking on roads, instant lane anomaly detection, sensor-based routing suggestions, and local vision inference inside urban hardware. This allows transport networks to respond instantly instead of waiting for cloud decision loops, which add delays.

Edge AI in Energy, Smart Grids & Utilities

Energy infrastructure uses Edge AI in sensors to predict consumption patterns, detect power anomalies, optimize distribution, monitor grid health locally, and reduce energy waste. This has a direct impact on cost efficiency, predictive scaling, and real-time anomaly correction inside utility hardware networks.

Edge AI Frameworks & Software Tools

Edge AI development is powered by compact, high-performance frameworks built to run locally. Popular tools such as TensorFlow Lite, ONNX Runtime, PyTorch Mobile, and Edge Impulse help AI inference fit into small processors while staying fast and efficient. These frameworks support model conversion, real-time inference engines, and embedded deployment pipelines.

Developers can push updates over secure channels and optimize models for mobile AI chips, IoT hubs, and embedded robotics hardware. Businesses building smart products or AI-enabled IoT services should integrate edge-first frameworks early, because they deliver speed, privacy, bandwidth savings, and long-term cost benefits.

Choosing the right framework ensures reliability while opening room for scalable commercial Edge AI deployments across consumer and enterprise device fleets.

Model Optimization Techniques for the Edge

Edge AI models must be small, fast, and power-smart without losing accuracy. Techniques like quantization, pruning, model compression, and knowledge distillation reduce compute load and thermal strain. Quantization converts model weights into low-bit formats, shrinking size while speeding inference. Pruning removes less useful neural connections, keeping only what matters most. Knowledge distillation transfers intelligence from a larger model into a smaller edge model, preserving performance with fewer resources.
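To make the quantization step concrete, here is a minimal pure-Python sketch of symmetric 8-bit quantization of a weight list. It is one common variant among several, written by hand for illustration; real toolchains apply this per tensor or per channel and handle activations as well. The function names are assumptions for this example.

```python
from typing import List, Sequence, Tuple


def quantize_int8(weights: Sequence[float]) -> Tuple[List[int], float]:
    """Map float weights to integers in [-127, 127] using one shared scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Clamp to guard against tiny floating-point overshoot at the extremes.
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized: Sequence[int], scale: float) -> List[float]:
    """Recover approximate float weights from the stored integers."""
    return [q * scale for q in quantized]


weights = [-1.0, -0.25, 0.0, 0.1, 0.5, 1.0]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
worst_error = max(abs(a - b) for a, b in zip(weights, restored))
print(q)
print(f"scale={scale:.6f}, worst round-trip error={worst_error:.6f}")
```

Each weight now fits in one byte instead of four (for 32-bit floats), at the cost of a round-trip error of at most half the scale step.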

These methods help AI run smoothly inside cameras, sensors, home hubs, and mobile gadgets, even offline. Optimizing properly also reduces cloud processing costs, enabling businesses to deploy commercial edge AI workloads at scale without huge infrastructure overhead.
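Pruning, described above as removing less useful connections, is often approximated by zeroing the smallest-magnitude weights. The following is a minimal sketch of that heuristic on a flat weight list; the function name and the 50% sparsity target are assumptions for illustration, and real pipelines usually fine-tune the model after pruning to recover accuracy.

```python
from typing import List, Sequence


def prune_by_magnitude(weights: Sequence[float], sparsity: float) -> List[float]:
    """Zero out the given fraction of weights with the smallest absolute value."""
    if not 0.0 <= sparsity <= 1.0:
        raise ValueError("sparsity must be between 0 and 1")
    to_remove = int(len(weights) * sparsity)
    if to_remove == 0:
        return list(weights)
    # The k-th smallest magnitude is the cut-off for removal.
    threshold = sorted(abs(w) for w in weights)[to_remove - 1]
    pruned, removed = [], 0
    for w in weights:
        if abs(w) <= threshold and removed < to_remove:
            pruned.append(0.0)
            removed += 1
        else:
            pruned.append(w)
    return pruned


weights = [0.9, -0.05, 0.3, 0.01, -0.7, 0.02]
print(prune_by_magnitude(weights, sparsity=0.5))
```

Zeroed weights only save memory and compute when the runtime or storage format exploits sparsity, which is why pruning is typically combined with compression or structured (whole-channel) removal.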

Hybrid Edge–Cloud Architecture

While Edge AI handles local inference, the cloud still plays a critical supporting role. Hybrid architecture blends both: training, storage, and orchestration happen in the cloud, while decision-making runs locally. Devices make instant AI calls on-chip or near-chip, while summarized insights sync to a central dashboard when needed.

If an edge device faces limitations, the cloud can assist in non-urgent tasks or large-scale analytics. This balance creates real-time responsiveness without sacrificing scalability or centralized learning. Businesses launching commercial AI device ecosystems benefit from hybrid models that reduce latency, protect data, and scale smarter without overwhelming network bandwidth or backend compute budgets.
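The "sync summaries when needed" half of a hybrid design is often built as a store-and-forward queue: decisions happen locally right away, and summaries wait on the device until the cloud is reachable. The sketch below assumes a hypothetical `send` callable that raises `ConnectionError` when offline; the class name and queue size are likewise illustrative.

```python
from collections import deque
from typing import Callable


class SummaryOutbox:
    """Buffers summaries on-device and uploads them in order when possible."""

    def __init__(self, send: Callable[[dict], None], max_items: int = 1000):
        self._send = send
        # Bounded queue: the oldest summaries are dropped if the device
        # stays offline long enough to fill it.
        self._queue = deque(maxlen=max_items)

    def record(self, summary: dict) -> None:
        """Store a summary locally; never blocks on the network."""
        self._queue.append(summary)

    def flush(self) -> int:
        """Try to upload queued summaries; return how many were sent."""
        sent = 0
        while self._queue:
            try:
                self._send(self._queue[0])
            except ConnectionError:
                break  # still offline; keep the rest for the next attempt
            self._queue.popleft()
            sent += 1
        return sent

    def pending(self) -> int:
        return len(self._queue)


# Hypothetical transport that can be toggled offline for the demo.
uploaded = []
network = {"online": False}


def fake_send(summary: dict) -> None:
    if not network["online"]:
        raise ConnectionError("cloud unreachable")
    uploaded.append(summary)


outbox = SummaryOutbox(fake_send)
outbox.record({"event": "door_open", "count": 3})
outbox.record({"event": "motion", "count": 12})
print(outbox.flush(), outbox.pending())  # offline: nothing sent yet
network["online"] = True
print(outbox.flush(), outbox.pending())  # online: queue drains
```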

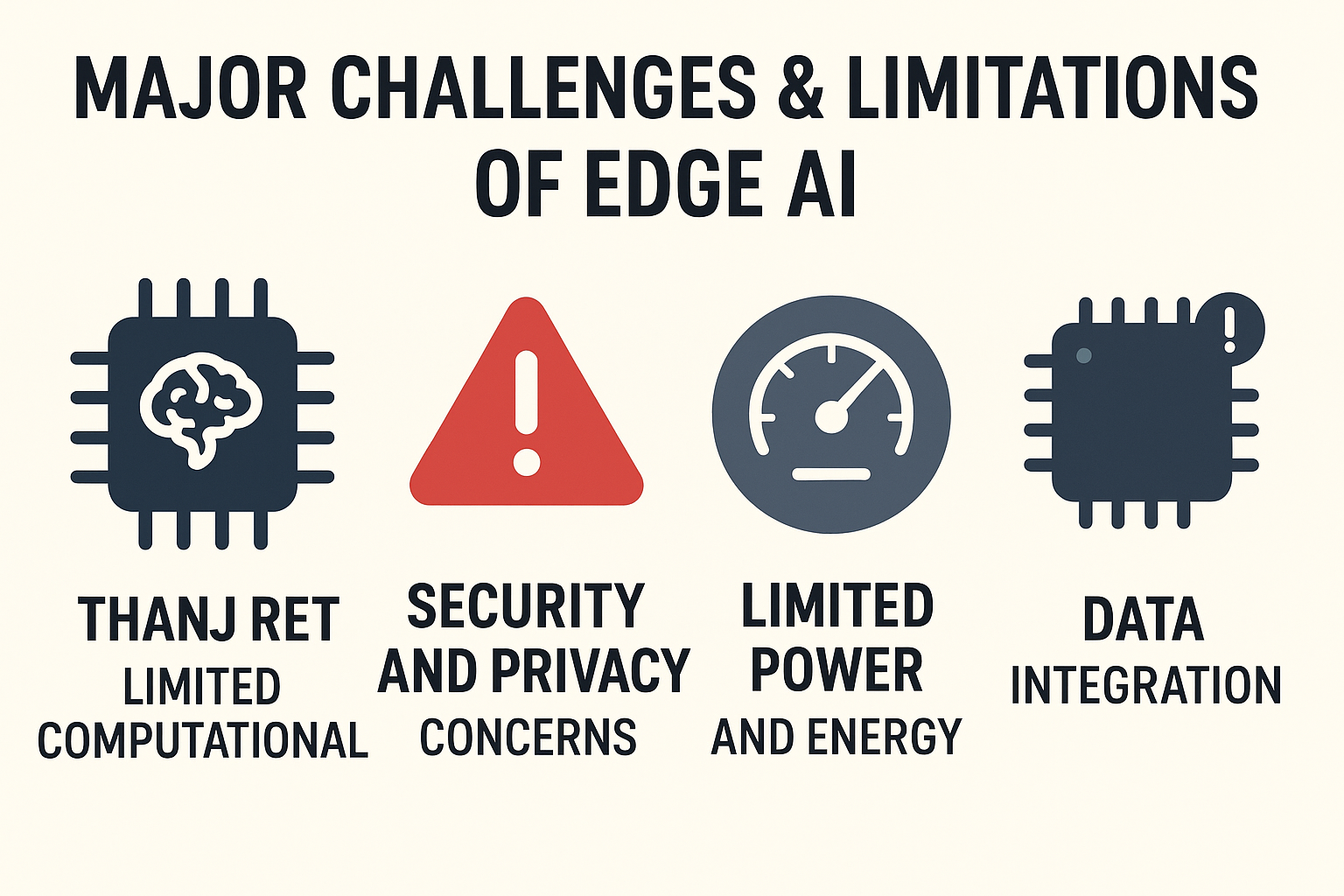

Major Challenges & Limitations of Edge AI

Edge AI isn’t perfect; it’s evolving fast, but trade-offs remain. Smaller hardware means processing limits, lower memory, and power constraints. AI models must be carefully tuned to avoid draining battery or overheating silicon.

Another challenge is maintaining model updates across thousands or millions of connected endpoints without disrupting uptime. Security risks also shift from cloud leakage to device-level vulnerabilities, model tampering, or unsafe firmware upgrades.

Despite these issues, most challenges are solvable through optimized hardware, secure deployment pipelines, compressed models, and hybrid edge-cloud collaboration. For businesses, planning for these limitations early ensures commercially reliable Edge AI systems that are both fast today and scalable tomorrow.
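As one small piece of a secure deployment pipeline, a device can refuse any model file whose authentication tag does not match. The sketch below uses an HMAC with a shared secret from Python's standard library purely to keep the example short; that shared-key setup is an assumption of this illustration, and fleets more commonly rely on asymmetric signatures so that devices hold only a public key.

```python
import hashlib
import hmac


def sign_model(model_bytes: bytes, key: bytes) -> str:
    """Compute an HMAC-SHA256 tag for a model file (done on the build server)."""
    return hmac.new(key, model_bytes, hashlib.sha256).hexdigest()


def verify_model(model_bytes: bytes, key: bytes, expected_tag: str) -> bool:
    """Check a downloaded model on-device before installing it."""
    actual = sign_model(model_bytes, key)
    # compare_digest avoids leaking information through timing differences.
    return hmac.compare_digest(actual, expected_tag)


# Hypothetical key and payload for demonstration only.
device_key = b"example-shared-secret"
model_v2 = b"\x00\x01fake-model-weights"
tag = sign_model(model_v2, device_key)

print(verify_model(model_v2, device_key, tag))              # untouched file
print(verify_model(model_v2 + b"\xff", device_key, tag))    # tampered file
```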

Future Trends of Edge AI in Smart Devices

Edge AI is moving toward smarter chips, faster 5G-enabled inference, federated learning on-device, and continuous local model improvements. We’ll see AI that updates intelligently without cloud bottlenecks, devices that learn user behavior offline, and micro models that operate in real-time across billions of endpoints.

Expect increased adoption in home automation, healthcare wearables, smart stores, industrial QC nodes, mobility hubs, and city infrastructure grids. Businesses entering Edge AI services or product development will benefit from investing now: the edge-first wave is becoming a commercial necessity, not just a technical upgrade.
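Federated learning, one of the trends named above, lets devices train on their own data and share only model updates. Its core aggregation step, commonly called federated averaging, is a weighted mean of the device models. The sketch below shows only that step on plain lists; the function name and sample numbers are illustrative, and real systems add secure aggregation, client sampling, and many training rounds.

```python
from typing import List, Sequence, Tuple


def federated_average(updates: Sequence[Tuple[Sequence[float], int]]) -> List[float]:
    """Combine per-device weights, weighting each by its local sample count.

    Each update is (weights, num_local_samples). Raw data never appears here;
    only the weights and a count leave each device.
    """
    total_samples = sum(n for _, n in updates)
    if total_samples == 0:
        raise ValueError("at least one device must contribute samples")
    dim = len(updates[0][0])
    return [
        sum(weights[i] * n for weights, n in updates) / total_samples
        for i in range(dim)
    ]


# Two hypothetical devices: one trained on 1 sample, one on 3.
device_updates = [([1.0, 2.0], 1), ([3.0, 4.0], 3)]
print(federated_average(device_updates))
```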

How Developers & Businesses Can Adopt Edge AI

Start small: optimize models early, test on real edge hardware, and use hybrid fallback for scale. Prioritize privacy-first local inference so users trust your device ecosystem. Automate firmware updates securely to maintain uptime at scale.

Evaluate use cases for home automation, wearable health sensors, industrial QC, retail analytics, or mobility intelligence, and deploy AI where real-time decisions matter most. Businesses should align product roadmaps with edge-AI silicon, optimized models, and commercially scalable inference engines.

Companies that move early will cut cloud bills, improve reliability, and deliver faster, safer, more intelligent products that feel personal, secure, and instant to end users.

Conclusion

Edge AI is revolutionizing smart devices by enabling real-time decisions, stronger privacy, and lower reliance on the cloud. From homes and wearables to healthcare, retail, and industry, it makes devices smarter, faster, and more efficient.

By adopting edge-first strategies, businesses and developers can deliver responsive, secure, and intelligent products. Edge AI isn’t just a technology upgrade; it’s the foundation for the next generation of connected, proactive devices.
